[1024 Ways to Play with Microsoft Azure] No. 55: Azure Speech Service, Quickly Converting Text to Speech with JavaScript

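[Introduction]

Text to speech converts input text into human-like synthesized speech, optionally using Speech Synthesis Markup Language (SSML) to describe the input. This article walks through converting text to speech with the Speech SDK for JavaScript.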
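Since the introduction mentions SSML, here is a minimal sketch of what building an SSML document in JavaScript might look like. The helper names `escapeXml` and `buildSsml` are hypothetical and not part of the Speech SDK; the resulting string is the kind of input that the SDK's `speakSsmlAsync` method accepts in place of plain text.

```javascript
"use strict";

// Escape the five XML special characters so user text cannot break the markup.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a minimal SSML document that speaks `text` with a single voice.
// (Hypothetical helper for illustration; not an SDK function.)
function buildSsml(text, voiceName, lang) {
  return (
    '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="' +
    escapeXml(lang) + '">' +
    '<voice name="' + escapeXml(voiceName) + '">' +
    escapeXml(text) +
    "</voice></speak>"
  );
}

console.log(buildSsml("Tom & Jerry say hello.", "en-US-JennyNeural", "en-US"));
```

Escaping the text first matters because characters such as `&` or `<` in user input would otherwise produce invalid XML.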

[Steps]

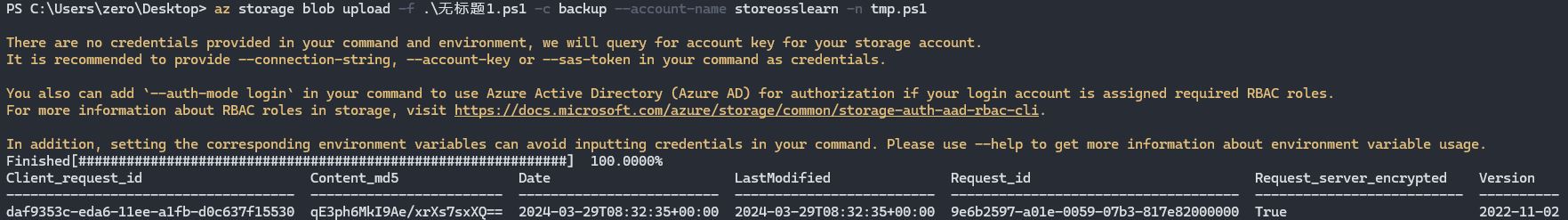

I. Set up the Speech SDK environment

1. Install the Speech SDK package for JavaScript with the following command:

yarn add microsoft-cognitiveservices-speech-sdk

2. Create a directory named "yuyin" and, inside it, a file named "index.js" that will hold the Speech SDK code.

II. Configure and debug the Speech SDK

1. Copy the following code into the index.js file created above:

(function() {
    "use strict";

    var sdk = require("microsoft-cognitiveservices-speech-sdk");
    var readline = require("readline");

    var key = "YourSubscriptionKey";
    var region = "YourServiceRegion";
    var audioFile = "YourAudioFile.wav";

    const speechConfig = sdk.SpeechConfig.fromSubscription(key, region);
    const audioConfig = sdk.AudioConfig.fromAudioFileOutput(audioFile);

    // The language of the voice that speaks.
    speechConfig.speechSynthesisVoiceName = "en-US-JennyNeural";

    // Create the speech synthesizer.
    var synthesizer = new sdk.SpeechSynthesizer(speechConfig, audioConfig);

    var rl = readline.createInterface({
        input: process.stdin,
        output: process.stdout
    });

    rl.question("Enter some text that you want to speak >\n> ", function (text) {
        rl.close();
        // Start the synthesizer and wait for a result.
        synthesizer.speakTextAsync(text,
            function (result) {
                if (result.reason === sdk.ResultReason.SynthesizingAudioCompleted) {
                    console.log("synthesis finished.");
                } else {
                    console.error("Speech synthesis canceled, " + result.errorDetails +
                        "\nDid you set the speech resource key and region values?");
                }
                synthesizer.close();
                synthesizer = null;
            },
            function (err) {
                console.trace("err - " + err);
                synthesizer.close();
                synthesizer = null;
            });
        console.log("Now synthesizing to: " + audioFile);
    });
}());
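The sample above uses the SDK's callback style. As an optional variation, the sketch below wraps `speakTextAsync` in a Promise so it can be used with `async`/`await`. The function name `speakTextToPromise` is hypothetical; it assumes only that the object passed in exposes `speakTextAsync(text, onResult, onError)` and `close()`, as the synthesizer in the sample does, and that the caller supplies the success value (for example `sdk.ResultReason.SynthesizingAudioCompleted`).

```javascript
"use strict";

// Wrap the callback-style speakTextAsync call in a Promise.
// synthesizer:     object with speakTextAsync(text, onResult, onError) and close()
// completedReason: the result.reason value the caller treats as success
// (Hypothetical helper for illustration; not an SDK function.)
function speakTextToPromise(synthesizer, text, completedReason) {
  return new Promise(function (resolve, reject) {
    synthesizer.speakTextAsync(
      text,
      function (result) {
        // Release the synthesizer whether or not synthesis succeeded.
        synthesizer.close();
        if (result.reason === completedReason) {
          resolve(result);
        } else {
          reject(new Error("Speech synthesis canceled: " + result.errorDetails));
        }
      },
      function (err) {
        synthesizer.close();
        reject(err instanceof Error ? err : new Error(String(err)));
      }
    );
  });
}

// Possible usage inside index.js, where `sdk` and `synthesizer` already exist:
//   speakTextToPromise(synthesizer, "Hello", sdk.ResultReason.SynthesizingAudioCompleted)
//     .then(function () { console.log("synthesis finished."); })
//     .catch(function (err) { console.error(err.message); });
```

Passing the synthesizer in as a parameter keeps the helper independent of how the SDK is loaded, which also makes it easy to exercise with a stand-in object.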

2. In index.js, replace YourSubscriptionKey with your Speech resource key and YourServiceRegion with your Speech resource region.

(function() {
    "use strict";

    var sdk = require("microsoft-cognitiveservices-speech-sdk");
    var readline = require("readline");

    var key = "e68024063a4f74be08902ac71329082e6"; // Speech resource key
    var region = "EastUS"; // Speech resource region
    var audioFile = "YourAudioFile.wav"; // Output audio file

    const speechConfig = sdk.SpeechConfig.fromSubscription(key, region);
    const audioConfig = sdk.AudioConfig.fromAudioFileOutput(audioFile);

    // The language of the voice that speaks.
    speechConfig.speechSynthesisVoiceName = "en-US-JennyNeural";

    // Create the speech synthesizer.
    var synthesizer = new sdk.SpeechSynthesizer(speechConfig, audioConfig);

    var rl = readline.createInterface({
        input: process.stdin,
        output: process.stdout
    });

    rl.question("Enter some text that you want to speak >\n> ", function (text) {
        rl.close();
        // Start the synthesizer and wait for a result.
        synthesizer.speakTextAsync(text,
            function (result) {
                if (result.reason === sdk.ResultReason.SynthesizingAudioCompleted) {
                    console.log("synthesis finished.");
                } else {
                    console.error("Speech synthesis canceled, " + result.errorDetails +
                        "\nDid you set the speech resource key and region values?");
                }
                synthesizer.close();
                synthesizer = null;
            },
            function (err) {
                console.trace("err - " + err);
                synthesizer.close();
                synthesizer = null;
            });
        console.log("Now synthesizing to: " + audioFile);
    });
}());

After the replacement, the file looks as shown in the figure.
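Hardcoding the key in index.js works for a quick test, but the key then ends up in anything you share or commit. As an alternative, the following minimal sketch reads both values from environment variables. The function name `loadSpeechSettings` and the variable names `SPEECH_KEY` and `SPEECH_REGION` are assumptions chosen for illustration; any names would do as long as they match what you set in your shell.

```javascript
"use strict";

// Read the Speech resource key and region from an environment-like object.
// Throws early with a clear message if either value is missing.
// (Hypothetical helper; SPEECH_KEY and SPEECH_REGION are assumed names.)
function loadSpeechSettings(env) {
  var key = env.SPEECH_KEY;
  var region = env.SPEECH_REGION;
  if (!key || !region) {
    throw new Error("Set the SPEECH_KEY and SPEECH_REGION environment variables first.");
  }
  return { key: key, region: region };
}

// Possible usage inside index.js, replacing the hardcoded values:
//   var settings = loadSpeechSettings(process.env);
//   const speechConfig = sdk.SpeechConfig.fromSubscription(settings.key, settings.region);
```

Failing fast on a missing value gives a clearer message than the cancellation error the service would otherwise return.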

III. Synthesize speech to a file with Node

1. In the VS Code terminal, run the program with Node to start synthesizing speech to a file:

node.exe index.js

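When it finishes, the output looks as shown in the following figure:

2. Follow the steps below to open the synthesized audio file.

3. As shown in the following figure, you can open the synthesized file directly and listen to it. With that, we have used the Speech SDK to synthesize an audio file.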
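Besides listening to the file, you can do a quick programmatic sanity check. The sketch below assumes the SDK wrote the file in a RIFF/WAV container, which is what the .wav file name in the sample suggests; the helper names `looksLikeWav` and `checkWavFile` are hypothetical. It only inspects the 12-byte header and does not validate the audio data itself.

```javascript
"use strict";

var fs = require("fs");

// Return true if the buffer starts with a RIFF/WAVE header:
// bytes 0-3 are "RIFF" and bytes 8-11 are "WAVE".
function looksLikeWav(buffer) {
  return buffer.length >= 12 &&
    buffer.toString("ascii", 0, 4) === "RIFF" &&
    buffer.toString("ascii", 8, 12) === "WAVE";
}

// Read a file from disk and apply the header check.
function checkWavFile(path) {
  return looksLikeWav(fs.readFileSync(path));
}

// Possible usage after running the sample:
//   console.log(checkWavFile("YourAudioFile.wav"));
```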

This is an original article, licensed under CC BY-NC-ND 4.0. When reposting it in full, please credit 慧眸.